Deepfakes, or Artificial Intelligence-generated synthetic videos, have been on the crisis communications periphery since 2017.

Although many deepfake videos imitating celebrities and politicians have circulated, it remains unclear whether communications professionals in every industry should prepare for a reputation nosedive caused by the harmful tech, or whether deepfakes should concern only those repping the rich and famous.

Impact of Deepfakes on Brand Reputation

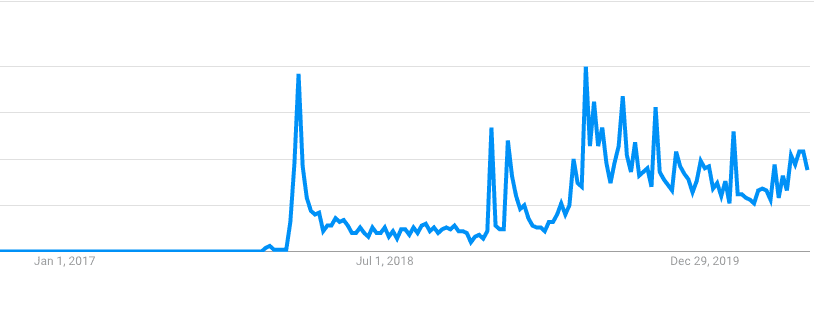

Per Google Trends, the term deepfake made its way into the mainstream in February 2018, when Pornhub and Twitter announced they would ban all videos that swapped adult actors’ faces for celebrities’. And while 90 percent of deepfakes are pornographic, according to deepfake detection software firm Deeptrace, manipulated media could easily creep into the corporate communications sphere.

“Given that corporate reputation can be a key influencer of share price, a leaked synthetic video or audio clip of a C-suite executive disclosing incriminating or sensitive information about a company’s sales performance or future expansion plans could be used to manipulate markets,” warns Henry Adjer, Deeptrace’s head of threat intelligence. In such an instance, communication professionals could spend months dealing with the fallout.

Initiatives to Combat the Spread

In 2019, deepfakes reentered the national discourse, this time as the result of a viral video of Facebook CEO Mark Zuckerberg, who was shown delivering a sinister speech about Facebook’s unchecked power. Two British artists working with advertising firm Canny produced the video as part of a multimedia project combining “big data, dada and conceptual art,” according to the original Instagram post.

In addition to a near-perfect reproduction of Zuckerberg’s face, machine learning technology was used to imitate the Facebook chief’s voice in the Canny video.

In the confusion that followed, Facebook, along with Microsoft and other corporations, announced initiatives to detect and remove deepfakes. The initial step was a collaboration to create a database of manipulated media.

“As a major platform to spread deepfakes, [it] was the right move for Facebook to mitigate some of the damage,” says Ellen Huber, an account supervisor at communications firm kglobal.

The Next Wave

Manipulated audio could well be the next wave of online fraud, with imposters accessing bank accounts or even posing as personal contacts.

In August 2019, machine learning technology was successfully used to impersonate the voice of the CEO of a U.K.-based energy firm asking for a $243,000 transfer, The Wall Street Journal reported.

Synthetic voice audio could cause significant financial and reputational damage if successful, particularly in the technology sector, according to Adjer.

“This is of particular concern if a company’s reputation is built upon a public perception of technological prowess or having highly secure business operations,” he adds.

Legal Ramifications

Communication teams should be prepared to seek legal counsel if a deepfake-triggered crisis hits.

Carolyn S. Toto, a partner in law firm Pillsbury Winthrop Shaw Pittman LLP’s intellectual property practice, says deepfakes are here to stay, “and will, unfortunately, become harder to identify as AI technology gets more sophisticated.”

Legal claims against deepfakes could range from intellectual property infringement or invasion of privacy to libel and harassment. “However, the legal precedent is sparse, and the legality of deepfakes can be complicated,” Toto cautions.

The complexity of digital media ownership can throw a wrench into the litigation process for brands that want to sue those responsible for creating or distributing deepfakes related to their companies.

Depending on where the original image was obtained, says Toto, “there might be grounds for a [copyright infringement] claim, but the owner may not always be the person whose reputation is being harmed.”

For instance, she notes, if a deepfake uses a photograph of a celebrity, the copyright owner might be the photographer, not the celebrity.

Similarly, if the image of a celebrity is synced into a video that someone else originally created, Toto says the copyright claim would belong to the owner of the original video content rather than the star featured.

HR Implications

In addition to chief executives and celebrities, deepfakes can cause PR issues for HR; indeed, “employees at all levels of a business’ operations” can be impacted, Adjer says.

“Defamatory deepfakes could be used to sabotage careers or prospective employees, posing challenges to corporate HR teams’ vetting procedures,” he argues.

One can imagine a scenario in which an individual is turned down for a job at a major corporation as a result of manipulated media. Then that person takes to Twitter to drag the company in question for not being able to spot the fake.

Influencer Marketing

While many PR pros may not work at a company with a press-magnet CEO or an entertainment firm that runs publicity for stars, one potential attack vector Adjer raises is that of influencer marketing.

Nearly a week before the doctored Zuckerberg video surfaced, the artist collective responsible posted a deepfake of Kim Kardashian—one of the earliest celebrities to cash in on Instagram influencer marketing—bragging about her vast social-media-sourced wealth.

In this case, the video was recreated using a Condé Nast-owned clip. After the media company filed a copyright claim, the video was removed from YouTube, but not Instagram.

A VICE article recounting the case called copyright claims a Band-Aid for companies affected by deepfakes, particularly when manipulated media is satire or “transformative in nature” and thereby protected as fair use rather than treated as copyright infringement.

Whether or not your company is ready to take on the legal ramifications of a deepfake scandal, it couldn’t hurt to be prepared from a communications standpoint. Deepfakes are prevalent enough that PR teams need to maintain the capability to handle this sort of crisis.

A Deepfake Communications Crisis Checklist

- Evaluate the validity of the suspected deepfake with detection software or an expert opinion.

- Determine how to call the validity of the deepfake into question.

- Include evidence that proves it is a fake.

- Define key audiences internally and externally.

- Craft a response in the spirit of truth and transparency and share it with those audiences.
